Impression

Institutes applying for NAAC accreditation are mostly unsuspecting, and somewhat lackadaisical too. They go by what is given to them in the manual and rarely raise critical issues. While preparing the accreditation response, however, many of the professors leading the effort get frustrated with the kind of metrics they face. Here is a brief impression of what bedevils the NAAC accreditation system:

(a) General University Manual

- Institutes are free to opt out of ‘non-applicable’ metrics worth up to 5% of the total weight (50 marks). Since the ‘non-applicable’ metrics are not specified, it is observed that the DVV does not mind institutes opting out even of ‘applicable’ metrics, as long as these are not on the prohibited list. Therefore, make hay while the sun shines.

- AICTE, by its Gazette notification of 2014, makes NBA accreditation applicable even to all the professional technical education programs of the constituent colleges of a university. In practice, however, the UGC simply insists that universities get accredited by NAAC and maintains a stoic silence on NBA. So, what does one make of it?

- For the purpose of NAAC accreditation, all programs, such as Engineering, Management, Law and Health Sciences, have been lumped together. The UGC has given the university freedom to design, review and revise its curriculum periodically. In practice, however, the curriculum and minimum standards of programs such as health sciences (part of the same university) are tightly regulated by their ‘regulatory councils’, which override that freedom completely. This dichotomy hurts a multi-disciplinary university, particularly one with health sciences: it fails to score the requisite marks on the metrics of curriculum revision, addition of courses, flexibility etc. for no fault of its own. Ironically, the health sciences ‘regulatory councils’ rarely review their syllabus.

(b) Health Sciences University Manual: tough challenges

- It is not possible to opt out of any of the inapplicable metrics, even though the response to them is in the hands of the regulatory councils and not the university.

- Although the importance of the peer team visit has been enhanced, its weight is still low at 35%, as against 30% in the General Manual.

- It is well known that the faculty of private health sciences institutes undertake little work on research papers and books. The larger emphasis is on ‘live’ case studies, new scientific and technological know-how, and physical participation in labs and operations, followed by teaching engagement. However, much against the grain, NAAC lays emphasis on research papers and books.

- Inapplicable/partly applicable metrics are a big issue:

1.1.1 (Ql) (20 marks):

(a) Since the ‘regulatory councils’ of health sciences have assumed the role of designing and revising the curriculum and of adding or deleting courses, universities can at best suggest a few changes or introduce ‘value-added courses’ that are not part of the curriculum/syllabus. This metric is therefore applicable only to engineering, management and other disciplines where the university has a role in revising the curriculum. Ideally, the marks for this metric should be proportionally enhanced based on the response in those disciplines where the university is free to frame or revise a curriculum.

(b) Health Sciences Universities were hitherto not expected to immerse themselves in the ‘outcome-based education’ (OBE) process of PEOs, POs, COs etc. Essentially, this system was imported from ABET (USA) for engineering programs and formed an essential part of the ‘Washington Accord’ as regards UG engineering programs only. It was adopted in India by the NBA. To the best of my knowledge, nowhere in the world has this process been adopted by Health Sciences or Management programs, which raises a question about its applicability.

Well, if this system has to be imposed on institutes with health sciences, it would take at least 3 to 5 years to implement it fully, up to the level of ‘analysis and attainment’ of POs. It is established practice that the assessment and evaluation of COs relevant to specific POs is spread over the entire duration of a batch. In the final analysis, the assessment and evaluation of a batch emerges only after the OBE-adopting batch passes out. Will someone in NAAC understand this and limit their expectations of the institutes? It is important to note that even after PO attainments are arrived at, the ultimate purpose of carrying out changes in the curriculum will not be fully met at the university level, since the control to do so will continue to vest with the regulatory councils.

(c) Here are a few specific issues for NAAC to ponder as regards health sciences:

1.1.2 (Qn) (15 marks): Syllabus revision in the last 5 years is exclusively in the hands of MCI and the other regulatory councils. Hence, NAAC should enhance the marks of health sciences on this metric, based on the marks obtained by the other disciplines, where the university is free to carry out curriculum revision.

1.2.1 (Qn) (10 marks): CBCS and electives are decided by the regulatory councils, not by any university. The solution is the same as stated above for 1.1.2.

2.6.1 (Ql) (10 marks): It is not enough to have stand-alone ‘Learning Outcomes’. These must culminate in a system of assessment and evaluation with set levels of attainment. This criterion is unnecessary and contradicts 1.1.1.
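The proportional enhancement suggested for 1.1.1, 1.1.2 and 1.2.1 is not spelt out as a formula. One possible reading, offered purely as an illustration, is to score such a metric only on the disciplines where the university controls the curriculum and to scale that result to the full marks of the metric. In the sketch below, the function name `prorated_marks`, the per-discipline scores and the simple averaging are all assumptions made for illustration; none of this is NAAC's own method.

```python
def prorated_marks(max_marks, discipline_scores, regulated):
    """Score a metric using only the disciplines the university controls.

    max_marks         -- full marks of the metric (e.g. 15 for 1.1.2)
    discipline_scores -- hypothetical fraction (0.0 to 1.0) achieved per discipline
    regulated         -- disciplines whose curriculum a regulatory council controls
    """
    # Drop the disciplines where the university has no say in the curriculum.
    free = {name: score for name, score in discipline_scores.items()
            if name not in regulated}
    if not free:
        raise ValueError("no discipline is under the university's control")

    # Assumption: every remaining discipline counts equally.
    average_fraction = sum(free.values()) / len(free)
    return round(max_marks * average_fraction, 2)


# Made-up scores for a multi-disciplinary university.
scores = {"Engineering": 0.8, "Management": 0.6, "Health Sciences": 0.1}
print(prorated_marks(15, scores, {"Health Sciences"}))  # 10.5
```

With these made-up figures, the health sciences programs no longer drag the metric down; the university is judged only on the programs it is actually free to revise.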

Conclusion

To sum up, universities with health sciences have unfairly been made to wade through rough and muddy waters to score a top-level accreditation grade. They therefore need mentoring and an expert to guide them towards their accreditation goals. Institutes can rely on ‘NAAC consultants’ or ‘private experts’ from the list of ‘top accreditation consultants’ of India. ‘Accreditation Edge’, however, continues to remain a top NAAC consultancy in India, without a speck of doubt!

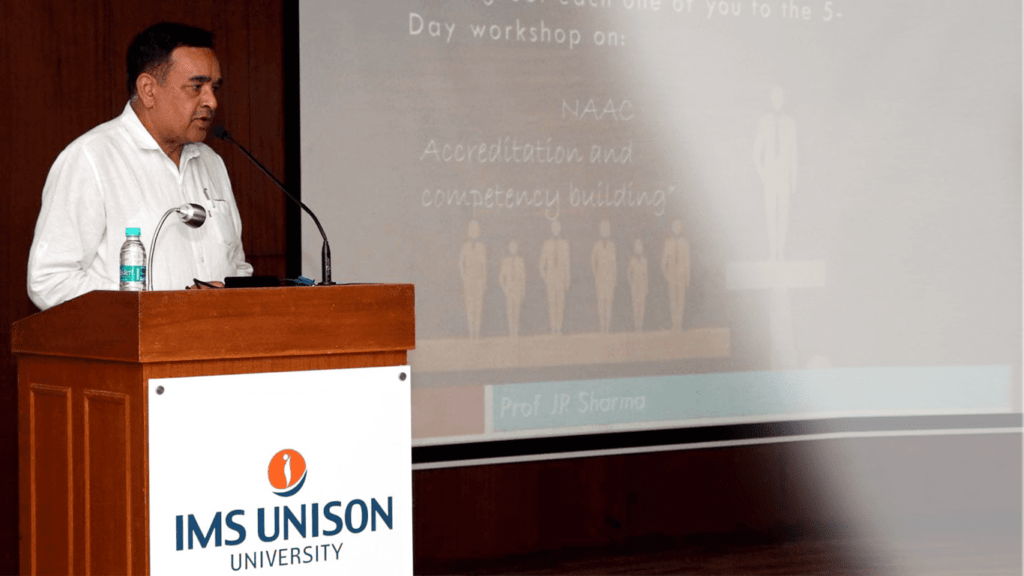

The writer, Prof JR Sharma, is the Managing Director of STEMVOGEL and leads its enterprise Accreditation Edge, which supports HEIs in global best practices in STEM and accreditation.